V6.8.0 (June 2025 Release)

At Qyrus, we are committed to continuously advancing our platform: equipping your teams with modern tools, simplifying complex testing processes, and making the path to quality clearer and more accessible. This June release brings new features and enhancements that expand data connectivity, add AI-assisted test creation, and give you finer control across your entire testing journey.

Let’s dive into the latest capabilities we’ve integrated into the Qyrus platform this June!

Improvement

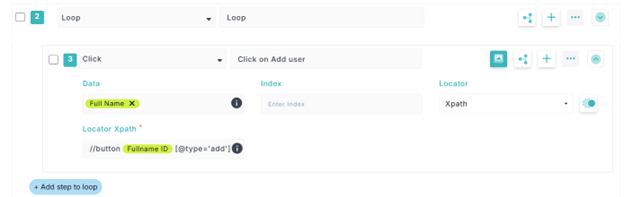

Loop Smarter: Combine Static & Dynamic Data in Your Web Locators!

The Problem:

Previously, users encountered difficulties when trying to automate interactions with dynamic web elements, like tables or lists, within a loop. Crafting locators that could adapt to changing element attributes or indices during each iteration was cumbersome, often requiring users to code complex workarounds or limiting the ability to create truly flexible, data-driven tests for these common web structures.

The Fix:

We have enhanced our web testing capabilities by enabling the combination of both dynamic (variable data, e.g., from a loop’s iteration or a data source) and static (user-defined text) input within the locator field for steps inside a loop. This allows for the construction of adaptive locators on the fly.

How does it help:

This provides significantly more flexibility in web test automation. Users can now easily iterate over dynamic elements such as rows in a table or items in a list where part of the locator remains constant while another part changes with each iteration. This enables the creation of more robust, data-driven locators, simplifying the automation of complex web scenarios and making tests easier to maintain.
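As a rough illustration of the idea, the sketch below joins fixed locator text with a value that changes on each iteration. The function names, the `orders` table id, and the XPath pattern are hypothetical and are not Qyrus syntax; the platform's locator field may express this differently.

```python
# Hypothetical sketch: names and the XPath pattern are illustrative only.

def build_row_locator(static_prefix: str, row_index: int, static_suffix: str) -> str:
    """Join fixed locator text with a per-iteration (dynamic) value."""
    return f"{static_prefix}{row_index}{static_suffix}"


def locators_for_rows(row_count: int) -> list[str]:
    """Build one locator per table row; only the row index changes."""
    # XPath positions start at 1, so iterate 1..row_count inclusive.
    return [
        build_row_locator("//table[@id='orders']/tbody/tr[", i, "]/td[2]")
        for i in range(1, row_count + 1)
    ]


if __name__ == "__main__":
    for locator in locators_for_rows(3):
        print(locator)
```

The static parts stay in one place, so a change to the table structure only needs to be made once.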

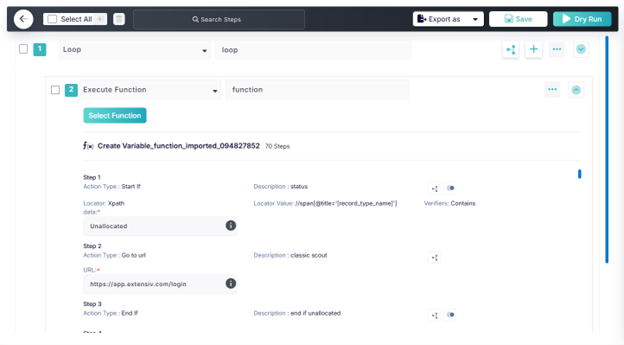

Improvement

Reuse Steps in Loops: Execute Function Support for Web Tests

The Problem:

Previously, when automating repetitive sequences of actions within a loop for web testing, users might have had to duplicate those steps for each iteration or for similar loops. This could lead to longer, more complex test scripts that were harder to maintain and less efficient, especially when a common set of operations needed to be performed repeatedly within the loop’s context.

The Fix:

We have enhanced our Web Testing capabilities by introducing support for executing functions within a loop. Users can now incorporate the “Execute Function” action type directly inside a loop, allowing them to call pre-defined, reusable functions during each iteration of the loop.

How does it help:

This significantly boosts the modularity, reusability, and maintainability of web test scripts. Users can encapsulate common or complex sequences of actions into a single function and then call this function repeatedly within a loop, or across different loops. This leads to cleaner, more concise test scripts, reduces redundancy, simplifies updates, and ultimately makes automated web tests more efficient and easier to manage.
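In general programming terms, the pattern resembles the sketch below: a sequence of steps is defined once and called on every loop iteration. The step names and the `add_item_to_cart` function are hypothetical and only stand in for a reusable function in a test script.

```python
# Hypothetical sketch of calling one reusable function from inside a loop.

def add_item_to_cart(item: str, log: list[str]) -> None:
    """A reusable 'function': a fixed sequence of steps, parameterized by item."""
    log.append(f"search {item}")
    log.append(f"open {item}")
    log.append(f"add {item} to cart")


def run_loop(items: list[str]) -> list[str]:
    """Call the same function once per iteration instead of duplicating its steps."""
    log: list[str] = []
    for item in items:
        add_item_to_cart(item, log)
    return log


if __name__ == "__main__":
    for step in run_loop(["pen", "notebook"]):
        print(step)
```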

New Feature

New MongoDB Connector: Unlocking NoSQL & Semi-Structured Data Testing

The Problem:

The data testing platform previously lacked direct support for semi-structured and NoSQL data sources like MongoDB. This meant users could not easily ingest, compare, or evaluate data from MongoDB instances, nor could they readily perform structured and nested validations on real-world JSON-like datasets within the platform.

The Fix:

A MongoDB Connector has been added to the data testing platform. This connector allows users to connect to MongoDB instances and ingest data specifically for use in Compare and Evaluate jobs.

How does it help:

This new connector enables users to work with semi-structured data from MongoDB, allowing for structured and nested validations on JSON-like datasets. It serves as a foundation for testing nested documents and supports advanced use cases such as comparing MongoDB documents against other data sources (like flat files or relational tables), evaluating fields using various assertion types, and integrating with an upcoming nested comparison framework.
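One common way to compare a nested document against a flat row is to flatten the document into dotted paths first. The sketch below shows that idea in plain Python; it is an assumption about how such a comparison could work, not a description of the connector's internals, and `flatten` and `compare_to_row` are hypothetical helpers.

```python
from typing import Any

# Hypothetical sketch: flatten a nested, JSON-like document so it can be
# compared column by column with a flat row (for example, from a CSV file).


def flatten(doc: dict[str, Any], prefix: str = "") -> dict[str, Any]:
    """Turn nested dicts and lists into a single level of dotted paths."""
    flat: dict[str, Any] = {}
    for key, value in doc.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        elif isinstance(value, list):
            for index, item in enumerate(value):
                item_path = f"{path}.{index}"
                if isinstance(item, dict):
                    flat.update(flatten(item, item_path))
                else:
                    flat[item_path] = item
        else:
            flat[path] = value
    return flat


def compare_to_row(doc: dict[str, Any], row: dict[str, Any]) -> dict[str, tuple]:
    """Return {column: (document_value, row_value)} for every mismatch."""
    flat = flatten(doc)
    return {
        column: (flat.get(column), expected)
        for column, expected in row.items()
        if flat.get(column) != expected
    }


if __name__ == "__main__":
    document = {"_id": 1, "customer": {"name": "Ada", "address": {"city": "Pune"}}}
    flat_row = {"customer.name": "Ada", "customer.address.city": "Delhi"}
    print(compare_to_row(document, flat_row))
```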

Improvement

Dry Run Delight: SQL Connectors Enhanced with LIMIT & OFFSET!

The Problem:

Previously, when configuring SQL connectors for tables or queries, users might not have had a direct way to restrict the number of rows fetched for initial testing or validation purposes. This meant that to test data mappings, transformations, or query logic, they might have had to process entire datasets, which could be slow and resource-intensive, especially during the development and dry run phases.

The Fix:

We have improved our SQL connectors to now support the use of LIMIT and OFFSET clauses directly within the table and query configuration settings. This allows users to specify the maximum number of rows to retrieve (LIMIT) and the starting point for data retrieval (OFFSET) when setting up their data connections.

How does it help:

This enhancement significantly improves efficiency, particularly for testing and validation. Users can now easily configure “Dry Run” setups to fetch and process only a small, manageable portion of their data. This enables them to quickly validate their data mappings, transformations, and query logic without the overhead of processing full datasets, leading to faster iteration cycles, reduced resource consumption during development, and more agile data pipeline testing.
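The effect of LIMIT and OFFSET can be seen with any SQL engine. The sketch below uses Python's built-in `sqlite3` module and an in-memory table; the `orders` table and the `fetch_sample` helper are hypothetical and are not part of the Qyrus connector configuration.

```python
import sqlite3

# Hypothetical sketch: fetch a small window of rows for a dry run.


def fetch_sample(conn: sqlite3.Connection, table: str, limit: int, offset: int = 0):
    """Return at most `limit` rows, skipping the first `offset` rows."""
    # Table names cannot be bound as parameters, so check the name against
    # the database catalog before placing it in the query text.
    known = {
        row[0]
        for row in conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'")
    }
    if table not in known:
        raise ValueError(f"unknown table: {table}")
    # A stable ORDER BY keeps the window repeatable between runs.
    query = f'SELECT * FROM "{table}" ORDER BY rowid LIMIT ? OFFSET ?'
    return conn.execute(query, (limit, offset)).fetchall()


def demo():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
    conn.executemany(
        "INSERT INTO orders (id, amount) VALUES (?, ?)",
        [(i, i * 10.0) for i in range(1, 101)],
    )
    # Skip the first 10 rows, then read 5 of the 100 available.
    return fetch_sample(conn, "orders", limit=5, offset=10)


if __name__ == "__main__":
    for row in demo():
        print(row)
```

Without an ORDER BY, most databases do not guarantee which rows a LIMIT/OFFSET window returns, so a sort key is worth including when results need to be reproducible.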

Improvement

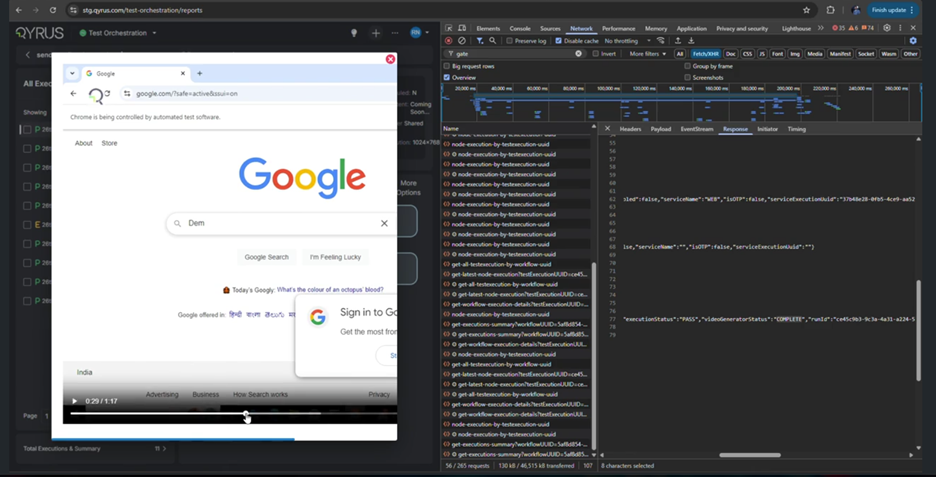

Unified Workflow Playback: Single Video for Web & Mobile Executions!

The Problem:

Previously, when reviewing test executions for workflows in Test Orchestration that involved both Web and Mobile services, users might have had to access and manage separate video recordings for each service. This fragmented approach made it cumbersome to get a complete, end-to-end view of the entire workflow.

The Fix:

We’ve introduced a significant enhancement to our consolidated reporting in Test Orchestration: a single video per workflow execution. This means that for workflows involving both Web and Mobile services, a single, consolidated video recording will be generated, seamlessly combining the visual recordings from both service interactions into one comprehensive playback.

How does it help:

This feature provides users with a unified and complete visual record of their entire workflow execution from start to finish, all in one place. It greatly simplifies the review process, making it easier to understand the full user journey across different platforms (Web and Mobile).

Improvement

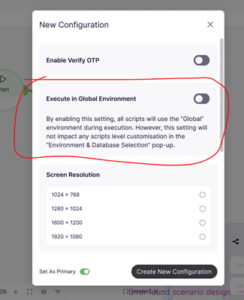

Streamlined Test Setup: Run All Nodes in a Global Environment

The Problem:

Previously, users were required to select an environment for each individual node within their test configurations. While this offers granular control for complex multi-environment testing scenarios, it was inconvenient and time-consuming for users who primarily operate with a single, consistent environment (like a global or default one) across all nodes, or who simply wanted a quick way to run everything against a standard setup.

The Fix:

To address this, we have added a Global Environment toggle. When activated, this option runs all nodes in the test configuration using the “Global Environment” defined for each node's service, removing the need to select an environment for each node individually.

How does it help:

This enhancement will significantly simplify and speed up the test configuration process for many users. It offers a convenient one-click solution for those who wish to execute all their test nodes against a pre-defined global or default environment, reducing repetitive manual setup and saving valuable time. This leads to a more streamlined user experience, especially for large test suites or when a uniform environment strategy is applied.
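Conceptually, the toggle switches environment resolution from per-node to per-service. The sketch below illustrates that logic under assumed data shapes; the field names (`name`, `service`, `environment`) and the `resolve_environments` function are hypothetical and do not reflect the platform's actual configuration format.

```python
from typing import Optional

# Hypothetical sketch: resolve the environment for each node, with and
# without a global toggle.


def resolve_environments(
    nodes: list[dict[str, Optional[str]]],
    global_envs: dict[str, str],
    use_global: bool,
) -> dict[str, str]:
    """Map each node name to the environment it should run against."""
    resolved: dict[str, str] = {}
    for node in nodes:
        if use_global:
            # Toggle on: take the global environment of the node's service.
            resolved[node["name"]] = global_envs[node["service"]]
        else:
            # Toggle off: every node must carry its own selection.
            if node.get("environment") is None:
                raise ValueError(f"node {node['name']} has no environment selected")
            resolved[node["name"]] = node["environment"]
    return resolved


if __name__ == "__main__":
    workflow = [
        {"name": "login", "service": "web", "environment": None},
        {"name": "checkout", "service": "mobile", "environment": None},
    ]
    print(resolve_environments(workflow, {"web": "web-global", "mobile": "mobile-global"}, True))
```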

Improvement

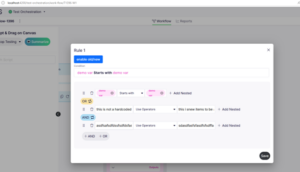

Branch Hub Gets Literal: Compare Directly with Static String Values!

The Problem:

Previously, when defining conditional rules within the Branch Hub, users might have faced limitations or complexities if they needed to compare a variable directly against a specific, fixed string value (a string literal). This could require workarounds, such as pre-defining the static string as another variable, making the rule creation process less intuitive or direct for common scenarios like checking if a status variable equals “Completed” or a type field matches “Urgent.”

The Fix:

We have enhanced Branch Hub to directly support the use of static string literals in comparison operations. This means users can now easily define rules by comparing a variable with a fixed string value directly entered into the condition, for example, Variable_Name == “Your Static Text”.

How does it help:

This update significantly simplifies the process of creating and managing rules in Branch Hub. Users can now more intuitively and directly define conditions based on specific text values without needing intermediate steps. This enhances the flexibility and clarity of rule definitions, making it easier to implement precise conditional logic for workflows.
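The logic of such a rule can be sketched as a comparison between a variable's value and a literal typed into the condition. The example below is an illustration only; the operator set, the `evaluate_rule` function, and the branch names are assumptions rather than Branch Hub's actual rule engine.

```python
import operator

# Hypothetical sketch: evaluate a rule that compares a variable with a
# static string literal and pick a branch from the result.

OPERATORS = {"==": operator.eq, "!=": operator.ne}


def evaluate_rule(variables: dict[str, object], name: str, op: str, literal: str) -> bool:
    """Compare the named variable with a fixed string entered in the rule."""
    if name not in variables:
        raise KeyError(f"unknown variable: {name}")
    if op not in OPERATORS:
        raise ValueError(f"unsupported operator: {op}")
    # Compare as text so the literal does not need a matching variable type.
    return OPERATORS[op](str(variables[name]), literal)


def choose_branch(variables: dict[str, object]) -> str:
    """Route to 'notify' when status equals the literal "Completed"."""
    if evaluate_rule(variables, "status", "==", "Completed"):
        return "notify"
    return "retry"


if __name__ == "__main__":
    print(choose_branch({"status": "Completed"}))
    print(choose_branch({"status": "Failed"}))
```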

Improvement

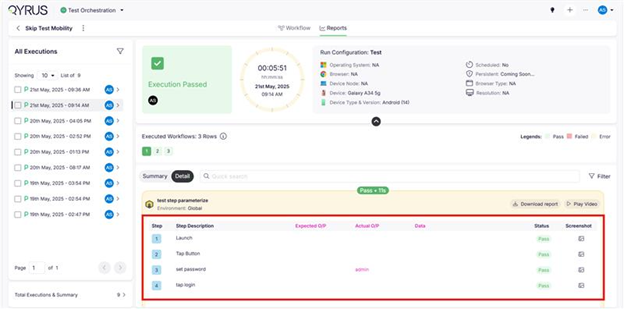

Enhanced Control: Skip Support Added for Parameterized Mobility Tests

The Problem:

Clients were unable to skip individual test cases within their parameterized Mobility test executions when running them through Test Orchestration. This lack of control meant all defined iterations of a parameterized test would execute, even if some were not relevant for a specific test run.

The Fix:

A validation has been added to the Mobility Framework specifically for Test Orchestration executions. This validation checks for the presence of a tilde (‘~’) character in parameterized test cases. If this character is detected, that particular parameterized step (test case or iteration) is skipped during execution and is also excluded from the test report.

How does it help:

This feature allows clients to customize their parameterized test executions more effectively within Test Orchestration. They can now selectively exclude specific test cases or iterations from a run, leading to more focused testing, faster execution times for relevant scenarios, and cleaner reports that only reflect the desired test scope.
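The skip rule can be pictured as a filter over the parameter rows of a test. The sketch below assumes that a row is skipped when any of its values contains the tilde; where exactly the marker must appear in a real parameterized test is not specified here, and `select_iterations` is a hypothetical helper.

```python
# Hypothetical sketch: drop parameterized iterations marked with a tilde.

SKIP_MARKER = "~"


def select_iterations(rows: list[dict[str, object]]) -> list[dict[str, object]]:
    """Keep only rows in which no value contains the skip marker."""
    kept = []
    for row in rows:
        # Assumption: the marker may appear in any parameter value.
        if any(SKIP_MARKER in str(value) for value in row.values()):
            continue  # skipped rows are neither executed nor reported
        kept.append(row)
    return kept


if __name__ == "__main__":
    data = [
        {"user": "alice", "locale": "en"},
        {"user": "~bob", "locale": "fr"},
        {"user": "carol", "locale": "de"},
    ]
    for row in select_iterations(data):
        print(row)
```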

Improvement

Refine Visual Comparisons: Introducing ‘Verify Screen’, Region Ignoring & Pixel Pass

The Problem:

Clients previously could not refine their visual testing comparisons by instructing the system to ignore specific, often dynamic or irrelevant, regions within device screenshots. This limitation could lead to inaccurate comparisons or an inability to focus solely on the critical visual elements of an application.

The Fix:

To address this, a new ‘Verify Screen’ action type has been introduced. This action allows users to limit visual comparisons to specific test steps within their script and, crucially, enables them to define and ignore specific regions within those device screenshots. Furthermore, new global settings have been added for Visual Testing executions: ‘Ignore Header,’ ‘Ignore Footer,’ and ‘Pixel Pass.’ The ‘Pixel Pass’ setting allows users to define an acceptable threshold for pixel differences in comparisons. These global settings are applied to all screenshots within a given visual testing execution.

How does it help:

These improvements provide users with substantially more control and customization over their visual testing comparisons. They can now make visual checks more precise by excluding irrelevant areas, consistently ignoring common elements like headers or footers, and setting tolerance levels for minor pixel variations.
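To make the interaction between ignored regions and a pixel tolerance concrete, the sketch below compares two small images represented as 2D lists. It assumes that the tolerance is a maximum percentage of differing pixels among those compared; how 'Pixel Pass' is actually measured is not stated here, and all names are hypothetical.

```python
# Hypothetical sketch: compare two screenshots while ignoring regions and
# allowing a tolerance for differing pixels.

Region = tuple[int, int, int, int]  # left, top, right, bottom (right/bottom exclusive)


def in_any_region(x: int, y: int, regions: list[Region]) -> bool:
    return any(l <= x < r and t <= y < b for (l, t, r, b) in regions)


def diff_ratio(baseline, actual, ignore_regions=()) -> float:
    """Fraction of compared pixels that differ, skipping ignored regions."""
    if len(baseline) != len(actual) or any(
        len(a) != len(b) for a, b in zip(baseline, actual)
    ):
        raise ValueError("images must have the same dimensions")
    regions = list(ignore_regions)
    compared = differing = 0
    for y, (row_a, row_b) in enumerate(zip(baseline, actual)):
        for x, (pixel_a, pixel_b) in enumerate(zip(row_a, row_b)):
            if in_any_region(x, y, regions):
                continue
            compared += 1
            if pixel_a != pixel_b:
                differing += 1
    return differing / compared if compared else 0.0


def passes(baseline, actual, tolerance_percent: float, ignore_regions=()) -> bool:
    """Pass when the share of differing pixels is within the tolerance."""
    return diff_ratio(baseline, actual, ignore_regions) * 100 <= tolerance_percent


if __name__ == "__main__":
    base = [[0] * 4 for _ in range(4)]
    shot = [row[:] for row in base]
    shot[0][0] = 1  # a change inside the header row
    shot[3][3] = 1  # a change in the page body
    header = (0, 0, 4, 1)  # treat the top row as an ignored header
    print(passes(base, shot, 10))
    print(passes(base, shot, 10, [header]))
```

In this example the header change counts against the result only when the header region is not ignored.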

New Feature

Testing the True Offline: Simulate Zero Network Speed on Android Devices

The Problem:

Accurately testing an application’s behavior when an Android device has absolutely no internet connectivity can be challenging. Standard network shaping tools might not offer a straightforward “zero speed” setting, and alternative methods for simulating a complete lack of connection might not be consistently reliable or easy to configure. This makes it difficult for developers and testers to confidently verify offline functionality, error handling for no-internet scenarios, and the overall user experience when a device is truly offline.

The Fix:

We have added a dedicated network profile, “Zero”, which users can select to simulate a complete loss of internet connectivity on Android devices.

How does it help:

This feature will enable users to reliably and accurately simulate their Android application’s behavior when the device has no internet access. It allows for thorough testing of critical offline functionalities, such as data caching, offline content access, graceful error messaging, and how the app recovers when connectivity is restored.

New Feature

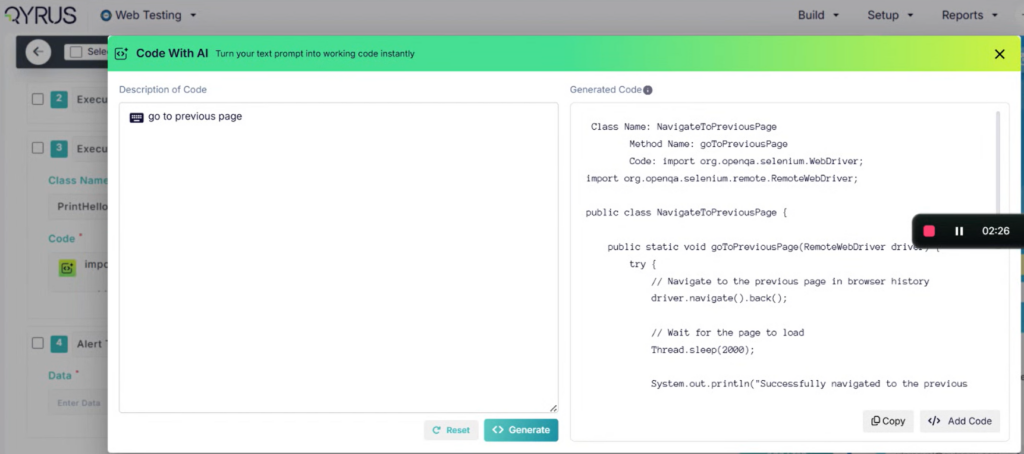

AI Supercharges Qyrus Recorder (FKA Encapsulate): AI Phase 1 is Live

The Problem:

Previously, creating and expanding tests with Qyrus Recorder (formerly known as Encapsulate) relied primarily on manual recording. Users who wanted to define tests from natural language descriptions, automatically generate additional test scenarios from existing ones, or export complete tests without manual touch-ups faced limitations.

The Fix:

AI Phase 1 is complete, integrating advanced QTP (Qyrus Test Persona/AI) capabilities directly into Qyrus Recorder (FKA Encapsulate). This brings several key enhancements:

- Users can now create and record tests within Qyrus Recorder simply by providing step descriptions, which the AI interprets to build the test flow.

- Users can leverage AI to automatically generate additional and relevant test scenarios based on the current test context, helping to easily expand test coverage.

- When exporting test steps from QTP, the “Go to Url” step will now automatically include the target URL, making exported tests more complete and ready to use.

How does it help:

This first phase of AI-powered enhancements improves the test creation process with Qyrus Recorder by:

- Offering Intuitive Test Design: Allowing tests to be built from natural language descriptions caters to diverse user preferences and can speed up the initial articulation of test flows.

- Boosting Test Coverage Effortlessly: AI-driven scenario generation helps users quickly identify and create a wider array of test cases, including edge cases, with minimal manual effort directly within the recorder.

- Streamlining Workflows: Ensuring URLs are included in exported “Go to Url” steps saves time and reduces the need for manual post-export modifications, making tests more portable and immediately actionable.

Improvement

Heads Up! In-App Alerts for Deployments & New Feature Highlights

The Problem:

Keeping users promptly informed about important events like ongoing system deployments (which might temporarily affect service) or the release of exciting new features could be challenging using traditional communication methods alone. Users might miss external announcements, leading to potential confusion during maintenance periods or slower adoption of newly added functionalities.

The Fix:

We have integrated a tool that enables us to display timely popups or snippets directly to users within the application. These in-app notifications will be used to proactively inform users about ongoing deployments and to highlight when new features have been added to the platform.

How does it help:

This new communication channel ensures users receive critical information and exciting news directly where they are working – within the application itself. It will improve awareness of ongoing deployments, potentially reducing confusion or support queries during maintenance windows.

Other Enhancements

- We have resolved underlying issues concerning the lifecycle management of dedicated browser frameworks. This involves ensuring these frameworks are now correctly and reliably allocated when needed, properly deallocated after use to free up resources, and effectively renewed when required, leading to a more robust management process.

- We have identified and resolved the problem that was hindering the system user re-invitation process. The fix ensures that when a system user is reinvited, they now properly receive the new invitation and can respond to it as expected, allowing them to join or re-access the system.

- We have corrected the functionality responsible for displaying the assigned team list in the user profile section. The system now accurately fetches and presents the user-to-team mappings, ensuring that the teams listed in a user’s profile are a true representation of their current assignments.

- We have now added the download options for Qloud-Bridge and Q-Spy (for various operating systems) to the new navbar. These essential links are now correctly integrated and visible within the main navigation structure.

- We have standardized the “view password” option across all relevant password set and password reset pages. This ensures that the functionality to toggle password visibility is consistently present and functions uniformly wherever users are required to create or update their passwords.

Virtual User Balance (VUB) – Test Your APIs Like Real Users

The Problem:

Traditional API testing often focuses on a single interaction or low-volume scenarios. But real-world usage rarely works that way. When hundreds or thousands of users interact with your system at once, you need to know whether your API will hold up—or fall apart. Until now, simulating realistic traffic patterns and usage concurrency required custom setups or third-party integrations.

The Fix:

With the new Virtual User Balance (VUB) system, qAPI now allows you to simulate up to 1,000 virtual users interacting with your API simultaneously—no external tools or custom load scripts required. Each project receives a monthly allocation of “virtual user credits” that can be used across all your test suites and environments.

Whether you’re stress testing for scale or validating business workflows under pressure, you can now launch realistic, high-volume test scenarios with a single click.
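For readers unfamiliar with the concept, the sketch below shows what simulating concurrent virtual users looks like in general. It runs a stub function in a thread pool instead of calling a real API; the stub, its failure pattern, and the worker cap are assumptions for illustration, and only the 1,000-user ceiling comes from the text above.

```python
import time
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Hypothetical sketch: run many simulated users concurrently and tally the
# response codes. No real network calls are made.

MAX_VIRTUAL_USERS = 1000  # ceiling mentioned in the release notes


def fake_api_call(user_id: int) -> int:
    """Stand-in for one user's request; every tenth user gets a 503."""
    time.sleep(0.01)  # pretend the request takes some time
    return 503 if user_id % 10 == 0 else 200


def run_virtual_users(count: int) -> Counter:
    """Run `count` simulated users and return a tally of status codes."""
    if not 1 <= count <= MAX_VIRTUAL_USERS:
        raise ValueError(f"count must be between 1 and {MAX_VIRTUAL_USERS}")
    # The pool size is capped here, so very large counts run in batches
    # rather than all at the same instant.
    with ThreadPoolExecutor(max_workers=min(count, 100)) as pool:
        statuses = list(pool.map(fake_api_call, range(count)))
    return Counter(statuses)


if __name__ == "__main__":
    print(run_virtual_users(50))
```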

How will it help?

- Test Like It’s Production: Simulate real-world concurrency and see how your API behaves under pressure.

- One Click, No Setup: No need to build custom load test infrastructure—just launch and go.

- Shared Monthly Credits: Use your virtual user balance efficiently across all your test suites and environments.

Collaboration – Work Together on API Projects

The Problem:

Previously, API testing in qAPI was a solo experience. Users couldn’t easily collaborate or share resources, which created silos and duplicated work. Teams working on the same API had to manage separate environments, data sets, and test runs—slowing down delivery and increasing friction.

The Fix:

With the new Shared Workspaces feature, qAPI users can now invite teammates to collaborate on API projects in real time. Projects can still remain private, but when shared, everyone in the workspace can access the same collections, tests, environments, and virtual user credits.

It’s a seamless way to go from individual testing to true team collaboration—similar to switching from emailing files to working together in Google Docs.

How will it help?

- Faster Collaboration: Multiple users can contribute to the same project, in the same environment.

- Shared Resources: Pool test credits and environments across the team for better efficiency.

- Flexible Privacy: Keep projects private when needed or open them up for collaboration.

cURL Code Snippet – Copy and Paste Ready Code

The Problem:

Even after testing an API successfully, developers often had to manually recreate the same request logic in their application code—copying headers, payloads, and endpoints by hand. This was time-consuming and error-prone, especially for more complex APIs.

The Fix:

qAPI now lets you instantly generate a copy-paste-ready cURL snippet for any API request you’ve built and tested. This allows developers to take working API calls and drop them directly into scripts, documentation, or terminals—without having to rewrite a single line.

Support for additional languages and code frameworks is coming soon.
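The kind of output this produces can be approximated in a few lines. The sketch below builds a cURL command from a request description, using `shlex.quote` so that values survive a shell. The `to_curl` function and the example URL are hypothetical; qAPI's generated snippet may be formatted differently.

```python
import json
import shlex
from typing import Optional

# Hypothetical sketch: turn a request description into a cURL command line.


def to_curl(
    method: str,
    url: str,
    headers: Optional[dict[str, str]] = None,
    body: Optional[dict] = None,
) -> str:
    """Build a shell-safe cURL command for the given request."""
    parts = ["curl", "-X", method.upper(), shlex.quote(url)]
    for name, value in (headers or {}).items():
        parts += ["-H", shlex.quote(f"{name}: {value}")]
    if body is not None:
        # Serialize the payload as JSON and quote it as one shell argument.
        parts += ["--data", shlex.quote(json.dumps(body))]
    return " ".join(parts)


if __name__ == "__main__":
    print(
        to_curl(
            "post",
            "https://api.example.com/orders?id=1",
            {"Content-Type": "application/json"},
            {"qty": 2},
        )
    )
```

Quoting each value matters because URLs with query strings and JSON bodies contain characters that a shell would otherwise interpret.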

How will it help?

- Save Developer Time: No more recreating tested calls—just copy and go.

- Reduce Errors: Eliminate the risk of typos or config mismatches in code.

- Boost Developer Velocity: Get working API logic into your application faster.

Beautify – Make Messy Code Look Clean

The Problem:

APIs return data in formats like JSON, XML, or GraphQL—but the output is often compressed, unformatted, and hard to read. Manually formatting these responses takes time and increases the risk of missing bugs or misinterpreting structure.

The Fix:

qAPI now includes a Beautify feature that automatically reformats messy API request or response bodies into clean, readable, indented formats. It detects the content type (JSON, XML, GraphQL), and only activates when there’s something to format. If you’re working with plain text or already clean content, it stays out of the way.
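A simplified version of this behavior can be sketched with the Python standard library: guess the content type from the first character, reformat when parsing succeeds, and otherwise return the input unchanged. This is an assumption about the approach, not qAPI's implementation; GraphQL is left out because the standard library has no parser for it.

```python
import json
from xml.dom import minidom
from xml.parsers.expat import ExpatError

# Hypothetical sketch: pretty-print JSON or XML, leave everything else alone.


def beautify(body: str) -> str:
    """Return an indented version of JSON or XML input, else the input as-is."""
    text = body.strip()
    if not text:
        return body
    if text[0] in "{[":
        try:
            return json.dumps(json.loads(text), indent=2)
        except json.JSONDecodeError:
            return body  # looks like JSON but is not valid: do nothing
    if text[0] == "<":
        try:
            # toprettyxml also prepends an XML declaration line.
            return minidom.parseString(text).toprettyxml(indent="  ")
        except ExpatError:
            return body
    return body  # plain text or an unsupported format


if __name__ == "__main__":
    print(beautify('{"user":{"id":1,"roles":["admin","dev"]}}'))
    print(beautify("<a><b>1</b></a>"))
    print(beautify("plain text stays as it is"))
```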

How will it help?

- Readability First: Instantly make complex API responses human readable.

- Smarter Formatting: Only runs when needed and adapts to the data type.

Ready to Leverage June’s Innovations?

This June, Qyrus is putting enhanced connectivity, deeper AI intelligence, refined user control, and greater operational efficiency directly at your fingertips. From integrating with NoSQL databases like MongoDB and creating tests from natural language step descriptions with AI, to gaining precise control over visual comparisons with new ignore-region capabilities, every new feature is engineered to elevate your testing strategy. We are committed to providing a unified platform that not only adapts to your evolving needs but also streamlines your critical processes, empowering you to release high-quality software with greater speed and confidence.

Eager to explore how these June advancements can transform your testing efforts? The best way to appreciate the Qyrus difference is to experience these new capabilities directly.

Ready to dive deeper or get started?