Qyrus vs. Competition: Why Unified Data Testing is the Strategic Imperative for 2026

Zillow’s iBuying division collapsed after losing a staggering $881 million on housing models trained on inconsistent data.

This catastrophe proves that even the most advanced machine learning fails when built on a foundation of flawed information. Stanford AI Professor Andrew Ng captures the urgency: “If 80 percent of our work is data preparation, then ensuring data quality is the most critical task.”

Organizations now face an average annual loss of $15 million due to poor information quality. Most enterprises struggle with these costs because they lack sophisticated data quality testing tools to catch errors early.

Relying on manual checks in high-speed pipelines creates massive blind spots that invite financial disasters. Professional data quality validation in ETL processes must move beyond a reactive “firefighting” mindset. Precision requires a proactive strategy that protects your capital and restores trust in your digital insights.

The 1,000x Multiplier: Why Your Budget Cannot Survive Fragmented Quality

Ignoring quality creates a financial sinkhole that scales with terrifying speed. The industry follows a brutal economic principle known as the Rule of 100. A single defect that costs $100 to fix during the requirements phase balloons into a monster as it moves through your pipeline. That same bug costs $1,000 during coding and $10,000 during system integration. If it escapes to User Acceptance Testing, the bill hits $50,000. Once that flaw goes live in production, you face a recovery cost of $100,000 or more, at least 1,000 times the price of the original fix.

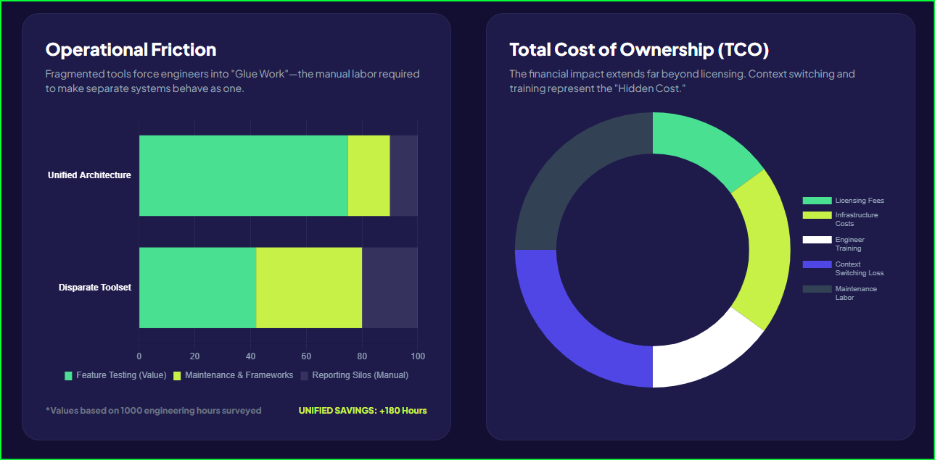
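To make the escalation concrete, the short Python sketch below recomputes each stage's cost as a multiple of the requirements-phase fix. It uses only the dollar figures quoted above; the variable names are illustrative, and the script is plain arithmetic rather than output from any testing tool.

```python
# Stage costs as quoted in the paragraph above (USD per defect).
stage_costs = {
    "Requirements": 100,
    "Coding": 1_000,
    "System integration": 10_000,
    "User acceptance testing": 50_000,
    "Production": 100_000,
}

# Express every stage as a multiple of the cheapest (requirements-phase) fix.
baseline = stage_costs["Requirements"]
multipliers = {stage: cost // baseline for stage, cost in stage_costs.items()}

for stage, factor in multipliers.items():
    print(f"{stage}: {factor:,}x the requirements-phase cost")
```

The production figure is where the 1,000x multiplier in this section's title comes from.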

Enterprises currently hemorrhage capital through maintenance overhead. Industry surveys report that keeping existing tests functional consumes up to 50% of the total test automation budget and 60-70% of resources. This means you spend most of your resources just maintaining the status quo instead of building new value. Fragmented ETL testing automation tools aggravate this problem by forcing engineers to update multiple disconnected scripts every time a schema changes.

The financial contrast is stark. Managing disparate tools for a 50-person QA team costs an average of $4.3 million annually, according to our estimates. Switching to a unified platform reduces this cost to roughly $2.1 million, a 51% reduction in total expenditure.

Breakdown of Annual Costs (50-Person Team)

| Cost Category | Disparate Tools | Unified Platform | Annual Savings |
| --- | --- | --- | --- |
| Personnel & Maintenance | $3,500,000 | $1,750,000 | $1,750,000 (50%) |
| Infrastructure | $500,000 | $250,000 | $250,000 (50%) |
| Tool Licenses | $200,000 | $75,000 | $125,000 (62.5%) |
| Training & Certification | $100,000 | $50,000 | $50,000 (50%) |
| Total Annual Cost | $4,300,000 | $2,125,000 | $2,175,000 (51%) |
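As a quick sanity check on the breakdown above, the following Python sketch recomputes the savings per category and the overall total. The figures are copied from the table; the `savings` helper and variable names are illustrative assumptions, not part of any Qyrus tooling.

```python
# Figures copied from the cost breakdown above (USD per year, 50-person team).
# Each entry is (disparate tools, unified platform).
costs = {
    "Personnel & Maintenance": (3_500_000, 1_750_000),
    "Infrastructure": (500_000, 250_000),
    "Tool Licenses": (200_000, 75_000),
    "Training & Certification": (100_000, 50_000),
}

def savings(disparate: int, unified: int) -> tuple[int, float]:
    """Return absolute savings and savings as a percentage of the disparate cost."""
    saved = disparate - unified
    return saved, 100 * saved / disparate

total_disparate = sum(d for d, _ in costs.values())
total_unified = sum(u for _, u in costs.values())
total_saved, total_pct = savings(total_disparate, total_unified)

for category, (disparate, unified) in costs.items():
    saved, pct = savings(disparate, unified)
    print(f"{category}: ${saved:,} saved ({pct:.1f}%)")
print(f"Total: ${total_saved:,} saved ({total_pct:.1f}%)")
```

The total works out to just under 51%, which matches the rounded figure quoted in the table.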

Implementing a robust ETL data testing framework allows you to stop paying the “Fragmentation Tax” and start investing in innovation. Without automated data quality checks, your organization remains vulnerable to the exponential costs of escaped defects.

Tool Sprawl is the Silent Productivity Killer in Your Pipeline

Fragmented workflows force your engineers to act as human integration buses. When you use separate platforms for web, mobile, and APIs, your team toggles between applications 1,200+ times daily. This constant context switching creates a massive cognitive tax, slashing productivity by 20% to 80%. Even near the low end of that range, a ten-person team loses 10 to 20 hours of work every single day.

Disconnected ETL testing automation tools also create dangerous blind spots. About 40% of production incidents stem from untested interactions between different layers of the software stack. Siloed suites often miss these UI-to-API mismatches because they only validate one piece of the puzzle at a time. Furthermore, data corruption in multi-step flows accounts for 25% of production bugs. Without an integrated ETL data testing framework, your team cannot verify a complete journey from the front end to the database.

Fragility in your CI/CD pipeline often leads to the “Pink Build” phenomenon. This happens when builds fail due to flaky tooling rather than actual code defects, causing engineers to ignore red flags. Maintaining these custom integrations costs an additional 10% to 20% of your initial license fees every year. To regain velocity, you must move toward automated data quality checks that run within a single, unified interface. Consolidation allows you to replace multiple expensive data quality testing tools with a platform that delivers data quality validation in ETL across the entire enterprise.

Sifting Through the Contenders in the Quality Arena

Choosing the right partner for your data strategy requires a clear view of the current market. Every organization has unique needs, but the goal remains the same: eliminating defects before they poison your decision-making. While specialized tools offer depth in specific areas, Qyrus takes a different path by providing a unified TestOS that handles web, mobile, API, and data testing within a single ecosystem.

Tricentis

Tricentis currently dominates the enterprise space with an estimated annual recurring revenue of $400-$425 million. It maintains a massive footprint, serving over 60% of the Fortune 500. Organizations deep in the SAP ecosystem often choose Tricentis for its specialized integration and model-based automation. However, its premium pricing and high complexity can feel like overkill for teams seeking agility.

Read the full breakdown: Qyrus vs. Tricentis: Enterprise Scale vs. Unified Agility

QuerySurge

If your primary concern is the sheer variety of data sources, QuerySurge stands out with over 200 connectors. It functions primarily as a specialist for data warehouse and ETL validation. While it offers the strongest DevOps for Data capabilities with 60+ API calls, it lacks the ability to test the UI and mobile layers that actually generate that data.

Read the full breakdown: Qyrus vs. QuerySurge: Specialist Connectivity vs. Full-Stack Coverage

iCEDQ

iCEDQ focuses on high-volume monitoring and rules-based automated data quality checks. Its in-memory engine can process billions of records, making it a favorite for teams with massive production monitoring requirements. Despite its power, a steeper learning curve and a lack of modern generative AI features may slow down teams trying to shift quality left.

Read the full breakdown: Qyrus vs. iCEDQ: Shifting Quality Left in the DataOps Pipeline

Datagaps

Datagaps offers a visual builder for ETL test automation and maintains a strong partnership with the Informatica ecosystem. It excels at baselining for incremental ETL and supporting cloud data platforms. However, it currently has fewer enterprise integrations and a less mature AI feature set than more unified data quality testing tools.

Read the full breakdown: Qyrus vs. Datagaps: Modernizing Quality for Cloud-Native Data

Informatica Data Validation

Informatica remains a global leader in data management, with a total revenue of approximately $1.6 billion. Its data validation module provides a natural extension for organizations already using its broader suite for data quality validation in ETL.

While these specialists solve pieces of the puzzle, Qyrus delivers a comprehensive ETL data testing framework that bridges the gap between your applications and your data.

The End of Guesswork: Scaling Data Trust with Unified Intelligence

Qyrus redefines the potential of modern data quality testing tools by replacing fragmented workflows with a single, unified TestOS. This platform allows your team to validate information across the entire software stack (Web, Mobile, API, and Data) without writing a single line of code. Instead of wrestling with brittle scripts that break during every update, engineers use a visual designer to build a resilient ETL data testing framework.

The platform operates through a powerful “Compare and Evaluate” engine that reconciles millions of records between heterogeneous sources in under a minute. For deeper analysis, Qyrus performs automated data quality checks on row counts, schema types, and custom business logic using sophisticated Lambda functions. This level of granularity ensures that your data quality validation in ETL remains airtight, even as your data volume explodes.
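For readers who want to see what these check types look like in principle, here is a minimal, tool-agnostic Python sketch of a row-count check, a schema-type check, a custom business rule expressed as a lambda, and a keyed source-to-target comparison. It is not the Qyrus engine or its API; every function name and the sample `order_id`/`amount` data are hypothetical, chosen only to illustrate the ideas.

```python
from typing import Any, Callable

Row = dict[str, Any]

def check_row_count(source: list[Row], target: list[Row]) -> bool:
    """Row-count check: the target should hold as many rows as the source."""
    return len(source) == len(target)

def check_schema(rows: list[Row], expected: dict[str, type]) -> list[str]:
    """Schema check: report each column that is missing or has the wrong type."""
    problems = []
    for i, row in enumerate(rows):
        for column, expected_type in expected.items():
            if column not in row:
                problems.append(f"row {i}: missing column '{column}'")
            elif not isinstance(row[column], expected_type):
                problems.append(f"row {i}: '{column}' is not {expected_type.__name__}")
    return problems

def check_rule(rows: list[Row], rule: Callable[[Row], bool]) -> list[int]:
    """Custom business rule: return the indexes of rows that violate it."""
    return [i for i, row in enumerate(rows) if not rule(row)]

def mismatched_keys(source: list[Row], target: list[Row], key: str) -> list[Any]:
    """Reconciliation: keys whose rows differ, or that exist on one side only."""
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}
    return sorted(k for k in src.keys() | tgt.keys() if src.get(k) != tgt.get(k))

# Hypothetical sample data: the target has a negative amount and a string amount.
source = [
    {"order_id": 1, "amount": 120.0},
    {"order_id": 2, "amount": 75.5},
    {"order_id": 3, "amount": 40.0},
]
target = [
    {"order_id": 1, "amount": 120.0},
    {"order_id": 2, "amount": -75.5},
    {"order_id": 3, "amount": "40.0"},
]

counts_match = check_row_count(source, target)
schema_problems = check_schema(target, {"order_id": int, "amount": float})
rule_violations = check_rule(
    target, lambda row: isinstance(row["amount"], float) and row["amount"] >= 0
)
differing = mismatched_keys(source, target, "order_id")

print(counts_match, schema_problems, rule_violations, differing)
```

Note that the row counts match even though two of the three target rows are wrong, which is why count checks alone are not enough and need to be paired with schema, rule, and record-level comparisons.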

Qyrus also future-proofs your organization for the next generation of automation: Agentic AI. While disparate tools create data silos that blind AI agents, Qyrus provides the unified context these agents need to perform autonomous root-cause analysis and self-healing. By leveraging Nova AI to identify validation patterns automatically, your team can build test cases 70% faster than traditional ETL testing automation tools allow. The results are definitive: case studies show 60% faster testing cycles and 100% accuracy with zero oversight errors.

The 45-Day Detox: Purging Pipeline Pollution and Reclaiming Truth

Transforming a quality strategy requires a structured path rather than a blind leap. Most enterprises hesitate to move away from legacy ETL testing automation tools because the migration feels overwhelming. However, a phased transition minimizes risk while delivering immediate visibility into your pipeline health. Organizations adopting unified platforms see a significant financial turnaround, with total benefits often reaching more than 200% over a three-year period.

The first 30 days focus on discovery within a zero-configuration sandbox. You connect directly to your existing sources and process a staggering 10 million rows per minute to expose critical flaws. This phase replaces manual data quality validation in ETL with high-speed automated data quality checks that provide instant feedback on your data health. Your team focuses on validation results instead of wrestling with infrastructure or complex configurations.

Following discovery, a two-week Proof of Concept (POC) deepens your insights. During this sprint, you build an ETL data testing framework tailored to your unique business logic and complex transformations. You generate detailed differential reports to pinpoint every discrepancy for rapid remediation.

Finally, you scale these data quality testing tools across the entire enterprise. Seamless integration into your CI/CD pipelines ensures that every code commit or deployment triggers a rigorous validation. This automated approach reduces manual testing labor by 60%, allowing your engineers to focus on innovation rather than maintenance.
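The general pattern behind a CI/CD quality gate can be sketched in a few lines of Python: run a set of named checks and turn the outcome into an exit code that the pipeline can act on. This is an illustrative assumption about how such a gate might be wired, not a description of the Qyrus integration; `run_gate`, the check names, and the sample amounts are all hypothetical.

```python
from typing import Callable

def run_gate(checks: dict[str, Callable[[], bool]]) -> int:
    """Run every named check; return 0 if all pass, 1 otherwise."""
    failed = []
    for name, check in checks.items():
        try:
            passed = check()
        except Exception as exc:  # a check that crashes counts as a failure
            passed = False
            print(f"ERROR {name}: {exc}")
        print(f"{'PASS' if passed else 'FAIL'} {name}")
        if not passed:
            failed.append(name)
    return 1 if failed else 0

# Hypothetical staged data containing one invalid (negative) amount.
staged_amounts = [120.0, 75.5, -4.0]

checks = {
    "row_count_matches": lambda: len(staged_amounts) == 3,
    "amounts_non_negative": lambda: all(a >= 0 for a in staged_amounts),
}

exit_code = run_gate(checks)
print(f"exit code: {exit_code}")
```

In a real pipeline step, the script would end by passing `exit_code` to `sys.exit`, so that a non-zero value fails the build and blocks the deployment.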

The Strategic Fork: Choosing Between Technical Debt and Data Integrity

The decision to modernize your quality stack is no longer just a technical choice; it defines your organization’s ability to compete in a data-first economy.

Continuing with a patchwork of disconnected ETL testing automation tools ensures that technical debt will eventually outpace your innovation. Leaders who embrace a unified approach fundamentally restructure their economic outlook.

This transition cuts your annual testing costs by an estimated 51% by eliminating redundant licenses and infrastructure overhead. More importantly, it liberates your engineering talent from the drudgery of tool maintenance and the “Fragmentation Tax” that slows down every release.

By implementing an integrated ETL data testing framework, you ensure that data quality validation in ETL becomes a silent, automated safeguard rather than a constant bottleneck. Proactive automated data quality checks provide the unshakeable foundation of truth required for trustworthy AI and precision analytics.

The era of guessing is over.

You can now replace uncertainty with a definitive “TestOS” that protects your bottom line and empowers your team to move with absolute confidence.

Your journey toward data integrity starts with a single strategic pivot. Contact us today!