AI Testing: Are You Buying a Feature or Building a New Department?

The conversation around quality assurance has changed because it has to. With developers spending up to half their time on bug fixing, the focus is no longer on simply writing better scripts. You now face a strategic choice that will define your team’s velocity, cost, and focus for years—a choice that determines whether quality assurance remains a cost center or becomes a critical value driver.

This choice boils down to a simple, yet profound, question: Do you buy a ready-made AI testing platform, or do you build one? This is not just a technical decision; it is a business one. Poor software quality costs the U.S. economy a staggering $2.41 trillion annually. The stakes are immense, as research shows 88% of online consumers are less likely to return to a site after a bad experience.

On one side, we have the “Buy” approach, embodied by all-in-one, no-code platforms like Qyrus. They promise immediate value and an AI-driven experience straight out of the box. On the other side is the “Build” approach—a powerful, customizable solution assembled in-house. This involves using a best-in-class open-source framework like Playwright and integrating it with an AI agent through the Model Context Protocol (MCP), creating what we can call a Playwright-MCP system. This path offers incredible control but demands a significant investment in engineering and maintenance.

This analysis dissects that decision, moving beyond the sales pitches to uncover real-world trade-offs in speed, cost, and long-term viability.

The ‘Build’ Vision: Engineering Your Edge with Playwright MCP

The appeal of the “Build” approach begins with its foundation: Playwright. This is not just another testing framework; its very architecture gives it a distinct advantage for modern web applications. However, this power comes with the responsibility of building and maintaining not just the tests, but the entire ecosystem that supports them.

Playwright: A Modern Foundation for Resilient Automation

Playwright runs tests out-of-process and communicates with browsers through native protocols, which provides deep, isolated control and eliminates an entire class of limitations common in older tools. This design directly addresses the most persistent headache in test automation: timing-related flakiness. The framework automatically waits for elements to be actionable before performing operations, removing the need for artificial timeouts. However, it does not solve test brittleness; when UI locators change during a redesign, engineers are still required to manually hunt down and update the affected scripts.
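The sketch below makes the auto-waiting idea concrete with a polling "wait until actionable" loop. It is a simplified illustration, not Playwright's implementation. The `ElementState` fields are a subset of the actionability checks Playwright documents (such as visible, stable, and enabled). Time is simulated so the example runs deterministically.

```typescript
// Simplified snapshot of an element at one moment (a subset of real actionability checks).
interface ElementState {
  attached: boolean;
  visible: boolean;
  enabled: boolean;
  stable: boolean; // e.g. not mid-animation
}

// A probe reports the element's state at a given elapsed time in milliseconds.
type Probe = (elapsedMs: number) => ElementState;

function isActionable(s: ElementState): boolean {
  return s.attached && s.visible && s.enabled && s.stable;
}

// Re-check every `intervalMs` until the element is actionable, or give up at `timeoutMs`.
// Returns the simulated time at which the action could proceed.
function waitForActionable(probe: Probe, timeoutMs: number, intervalMs: number): number {
  for (let t = 0; t <= timeoutMs; t += intervalMs) {
    if (isActionable(probe(t))) {
      return t;
    }
  }
  throw new Error(`Element not actionable within ${timeoutMs} ms`);
}

// A button that renders at 100 ms but only becomes enabled at 350 ms.
const slowButton: Probe = (t) => ({ attached: true, visible: t >= 100, enabled: t >= 350, stable: true });

console.log(waitForActionable(slowButton, 5000, 100)); // 400
```

A hard-coded sleep has two failure modes. It either waits longer than necessary, or it fires too early if the element is slower than expected. Polling against a timeout avoids both.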

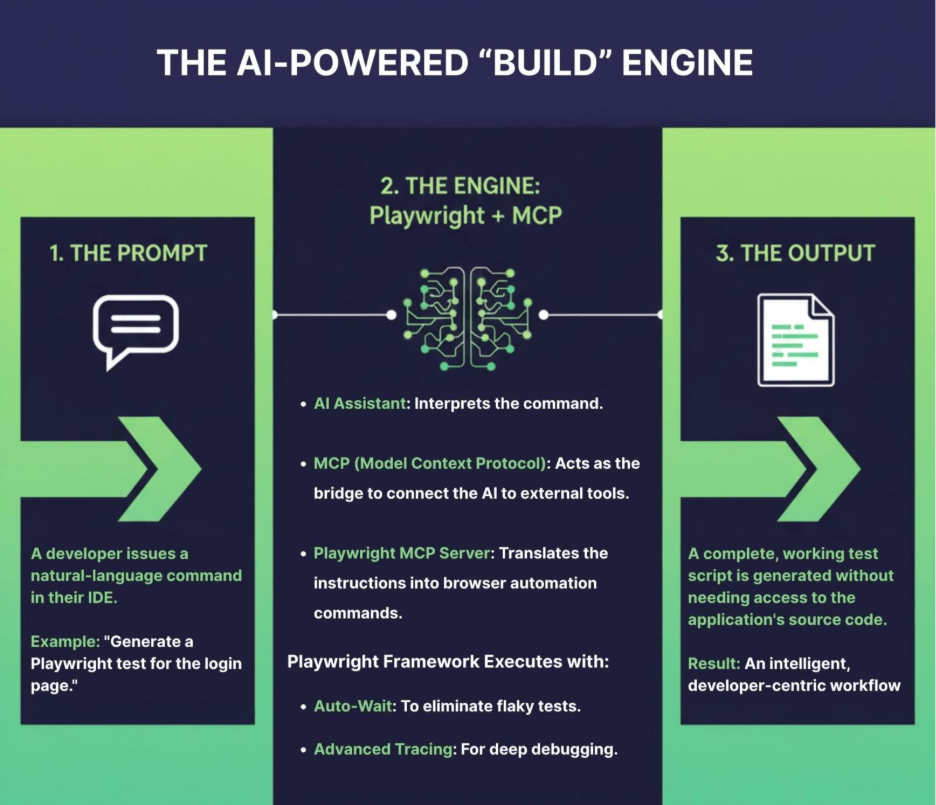

MCP: Turning AI into an Active Collaborator

This powerful automation engine is then supercharged by the Model Context Protocol (MCP). MCP is an open standard, introduced by Anthropic, that transforms AI assistants from simple code generators into active participants in the development lifecycle. It creates a bridge, allowing an AI to connect with and perform actions on external tools and data sources. This enables a developer to issue a natural language command like “check the status of my Azure storage accounts” and have the AI execute the task directly from the IDE. Microsoft has heavily invested in this ecosystem, releasing over ten specialized MCP servers for everything from Azure to GitHub, creating an interoperable environment.

Synergy in Action: The Playwright MCP Server

The synergy between these two technologies comes to life with the Playwright MCP Server. This component acts as the definitive link, allowing an AI agent to drive web browsers to perform complex testing and data extraction tasks. The practical applications are profound. An engineer can generate a complete Playwright test for a live website simply by instructing the AI, which then explores the page structure and generates a fully working script without ever needing access to the application’s source code. This core capability is so foundational that it powers the web browsing functionality of GitHub Copilot’s Coding Agent. Whether a team wants to create a custom agent or integrate a Claude MCP workflow, this model provides the blueprint for a highly customized and intelligent automation system.
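MCP messages are JSON-RPC 2.0, and an agent invokes a server's tools with a `tools/call` request. The sketch below only builds the messages an agent might send to the Playwright MCP server. It omits the `initialize` handshake and the transport (such as stdio).

The tool names `browser_navigate` and `browser_snapshot` reflect the Playwright MCP server's tool list as we understand it. Check them against the version you run. The URL is a placeholder.

```typescript
// Minimal JSON-RPC 2.0 request shape used by MCP.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

let nextId = 1;

// Build a `tools/call` request for a named tool with its arguments.
function toolCall(name: string, args: Record<string, unknown> = {}): JsonRpcRequest {
  return {
    jsonrpc: "2.0",
    id: nextId++,
    method: "tools/call",
    params: { name, arguments: args },
  };
}

// Two steps an agent might take while exploring a page in order to write a test.
const plan: JsonRpcRequest[] = [
  toolCall("browser_navigate", { url: "https://example.com/login" }), // placeholder URL
  toolCall("browser_snapshot"), // asks for a page snapshot the model can read
];

for (const request of plan) {
  console.log(JSON.stringify(request));
}
```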

The Hidden Responsibilities: More Than Just a Framework

Adopting a Playwright-MCP system means accepting the role of a systems integrator. Beyond the framework itself, a team must also build and manage a scalable test execution grid for cross-browser testing. They must integrate and maintain separate, third-party tools for comprehensive reporting and visual regression testing. And critically, this entire stack is accessible only to those with deep coding expertise, creating a silo that excludes business analysts and manual QA from the automation process.
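The sketch below shows what "managing a test execution grid" involves. It expands a cross-browser run into CI jobs using Playwright's `--project` and `--shard` CLI options. It assumes a config whose projects are named `chromium`, `firefox`, and `webkit`, as Playwright's default scaffold generates. The shard count of 4 is arbitrary.

```typescript
// Browser projects, assumed to match the `projects` names in playwright.config.ts.
const projects: readonly string[] = ["chromium", "firefox", "webkit"];

// Expand every (project, shard) pair into one CI job command.
function buildJobMatrix(projectNames: readonly string[], shardCount: number): string[] {
  const jobs: string[] = [];
  for (const project of projectNames) {
    for (let shard = 1; shard <= shardCount; shard++) {
      jobs.push(`npx playwright test --project=${project} --shard=${shard}/${shardCount}`);
    }
  }
  return jobs;
}

const jobs = buildJobMatrix(projects, 4);
console.log(jobs.length); // 12 jobs to provision, run, and collect results from
console.log(jobs[0]); // npx playwright test --project=chromium --shard=1/4
```

Each of those jobs still needs a runner, browser binaries, and somewhere to merge results. That is the operational work a DIY team owns.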

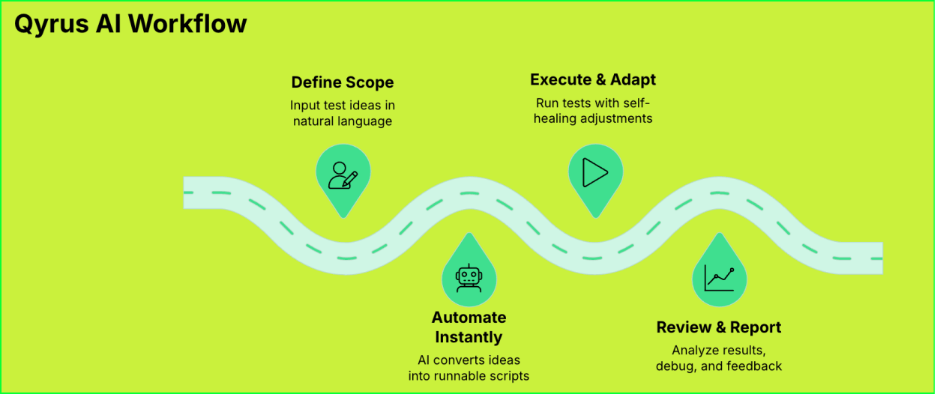

The ‘Buy’ Approach: Gaining an AI Co-Pilot, Not a Second Job

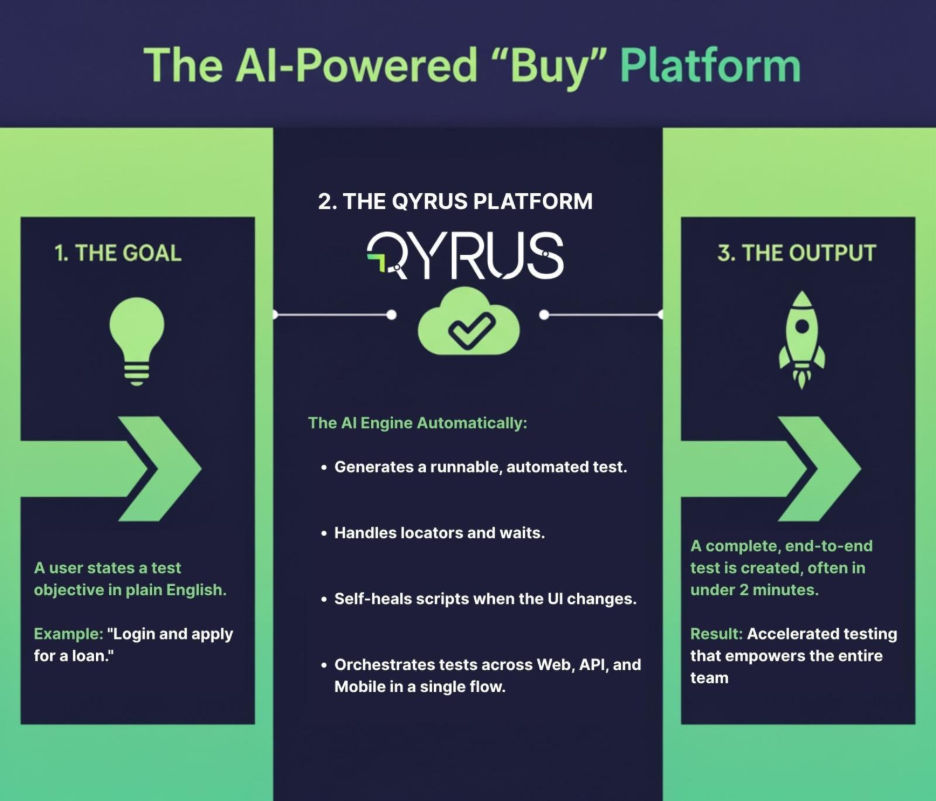

The “Buy” approach presents a fundamentally different philosophy: AI should be a readily available feature that reduces workload, not a separate engineering project that adds to it. This is the core of a platform like Qyrus, which integrates AI-driven capabilities directly into a unified workflow, eliminating the hidden costs and complexities of a DIY stack.

Natural Language to Test Automation

With Qyrus’ Quick Test Plan (QTP) AI, a user can simply type a test idea or objective, and Qyrus generates a runnable automated test in seconds. For example, typing “Login and apply for a loan” would yield a full test script with steps and locators. In live demos, teams achieved usable automated tests in under 2 minutes starting from a plain-English goal.

Qyrus allows testers to paste manual test case steps (plain text instructions) and have the AI convert them into executable automation steps. This bridges the gap between traditional test case documentation and automation, accelerating migration of manual test suites.

Democratizing Quality, Eradicating Maintenance

This accessibility empowers a broader range of team members to contribute to quality, but the platform’s biggest impact is on long-term maintenance. In stark contrast to a DIY approach, Qyrus tackles the most common points of failure head-on:

- AI-Powered Self-Healing: While a UI change in a Playwright script requires an engineer to manually hunt down and fix broken locators, Qyrus’s AI automatically detects these changes and heals the test in real time, preventing failures and addressing the maintenance burden that can consume 70% of a QA team’s effort. Beyond self-healing, common test framework elements (variables, secret credentials, data sets, assertions) are built-in features, not custom add-ons.

- Built-in Visual Regression: Qyrus includes native visual testing to catch unintended UI changes by comparing screenshots. This ensures brand consistency and a flawless user experience—a critical capability that requires integrating a separate, often costly, third-party tool in a DIY stack.

- Cross-Platform Object Repository: Qyrus features a unified object repository, where a UI element is mapped once and reused across web and mobile tests. A single fix corrects the element everywhere, a stark contrast to the script-by-script updates required in a DIY framework.
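The object-repository and self-healing ideas above can be illustrated with a generic fallback-locator technique. This is a hypothetical sketch; the article does not say how Qyrus implements either feature. Each logical element is mapped once, with an ordered list of candidate locators per platform. When the preferred locator stops matching, the first working fallback is promoted, so every test that references the element benefits from the one fix. The selector strings and the `Matcher` callback are illustrative stand-ins for a real page or device query.

```typescript
type Platform = "web" | "mobile";

// Hypothetical shared repository: one logical name, ordered candidate locators per platform.
const repository: Record<string, Record<Platform, string[]>> = {
  loginButton: {
    web: ["#login-btn", "button[type=submit]", "text=Log in"],
    mobile: ["~login_button", "//android.widget.Button[@text='Log in']"],
  },
};

// Stand-in for querying the live page or device: does this selector match right now?
type Matcher = (selector: string) => boolean;

function resolveLocator(name: string, platform: Platform, matches: Matcher): string {
  const entry = repository[name];
  if (entry === undefined) {
    throw new Error(`Unknown element: ${name}`);
  }
  const candidates = entry[platform];
  for (let i = 0; i < candidates.length; i++) {
    const selector = candidates[i];
    if (matches(selector)) {
      if (i > 0) {
        // "Heal": move the working fallback to the front so every test sharing
        // this entry tries it first from now on.
        candidates.splice(i, 1);
        candidates.unshift(selector);
      }
      return selector;
    }
  }
  throw new Error(`No locator for "${name}" matched on ${platform}`);
}

// After a redesign, the old id is gone but the submit button still exists.
const afterRedesign: Matcher = (selector) => selector === "button[type=submit]";
console.log(resolveLocator("loginButton", "web", afterRedesign)); // button[type=submit]
console.log(repository.loginButton.web[0]); // button[type=submit]
```

In practice, a promotion like this would usually be logged or reviewed rather than applied silently, since a fallback can match the wrong element.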

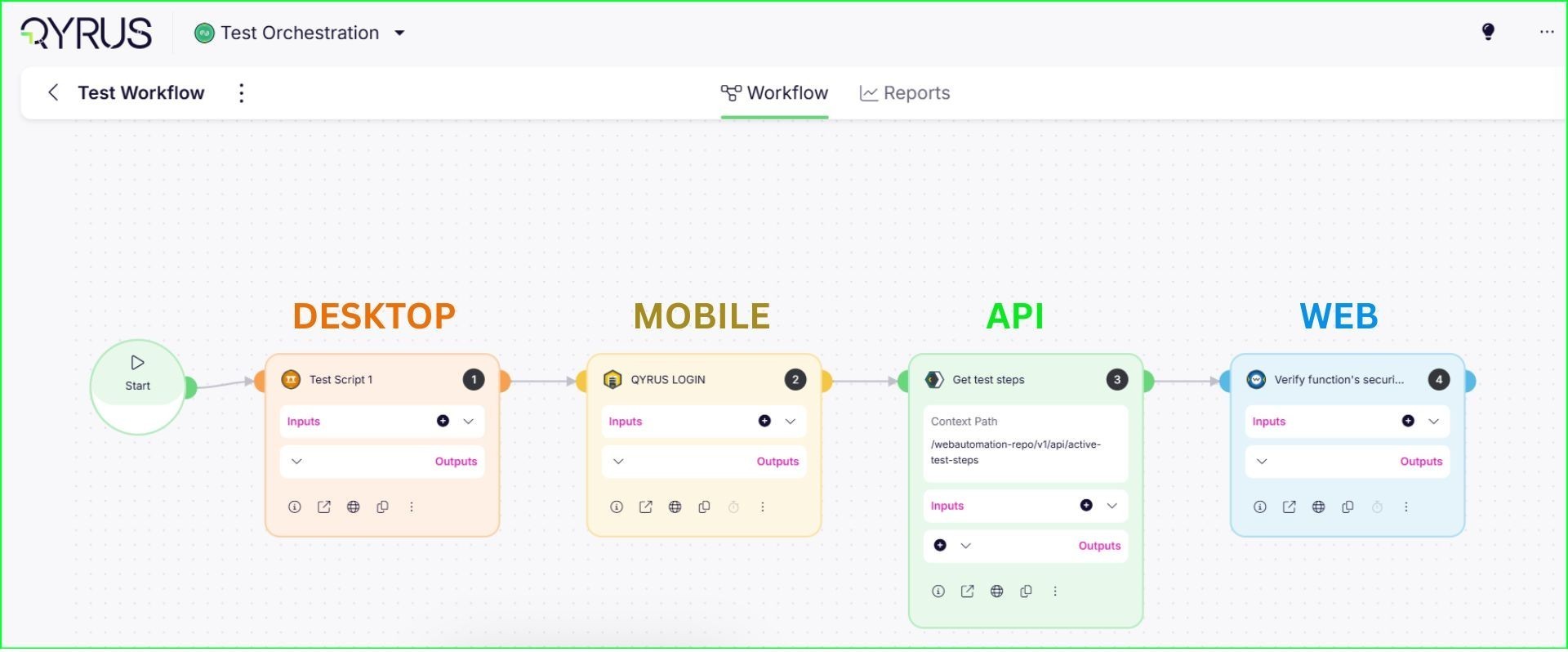
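Screenshot-based visual regression comes down to comparing a baseline image with a new one under some tolerance. The sketch below is a naive pixel-by-pixel comparison on raw RGBA arrays. The defaults (a per-channel tolerance of 16 and at most 1% changed pixels) are assumptions, and this is not any particular product's algorithm. Dedicated tools typically add refinements such as anti-aliasing detection and masking of dynamic regions.

```typescript
// Raw image: `rgba` holds 4 values (0-255) per pixel, row by row.
interface Bitmap {
  width: number;
  height: number;
  rgba: number[];
}

// Build a single-color image (handy for examples).
function solid(width: number, height: number, value: number): Bitmap {
  const rgba: number[] = [];
  for (let i = 0; i < width * height * 4; i++) {
    rgba.push(value);
  }
  return { width, height, rgba };
}

// Fraction of pixels where any channel differs by more than `channelTolerance`.
function changedPixelRatio(a: Bitmap, b: Bitmap, channelTolerance: number): number {
  if (a.width !== b.width || a.height !== b.height) {
    throw new Error("Screenshots differ in size");
  }
  const pixelCount = a.width * a.height;
  let changed = 0;
  for (let p = 0; p < pixelCount; p++) {
    for (let c = 0; c < 4; c++) {
      const i = p * 4 + c;
      if (Math.abs(a.rgba[i] - b.rgba[i]) > channelTolerance) {
        changed++;
        break; // count each pixel at most once
      }
    }
  }
  return changed / pixelCount;
}

function passesVisualCheck(baseline: Bitmap, current: Bitmap, channelTolerance = 16, maxChangedRatio = 0.01): boolean {
  return changedPixelRatio(baseline, current, channelTolerance) <= maxChangedRatio;
}

// A 10x10 white baseline; in the new screenshot the first 2 pixels turned black (2% changed).
const baseline = solid(10, 10, 255);
const current = solid(10, 10, 255);
for (let c = 0; c < 3; c++) {
  current.rgba[c] = 0;
  current.rgba[4 + c] = 0;
}
console.log(passesVisualCheck(baseline, current)); // false
console.log(passesVisualCheck(baseline, current, 16, 0.05)); // true
```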

True End-to-End Orchestration, Zero Infrastructure Burden

Perhaps the most significant differentiator is the platform’s unified, multi-channel coverage. Qyrus was designed to orchestrate complex tests that span Web, API, and Mobile applications within a single, coherent flow. For example, Qyrus can generate a test that logs into a web UI, calls an API to verify back-end data, and then continues on a mobile app, all in one flow. The platform provides a managed cloud of real mobile devices and browsers, removing the entire operational burden of setting up and maintaining a complex test grid.
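A single cross-channel flow is valuable because its steps share state and land in one timeline. The following is a hypothetical sketch of that idea, not Qyrus's engine. Each step is tagged with its channel and reads and writes a shared context. The run stops at the first failure and marks the remaining steps as skipped. The step bodies are stubs standing in for real browser, HTTP, and device drivers.

```typescript
type Channel = "web" | "api" | "mobile";
type Status = "passed" | "failed" | "skipped";

interface Step {
  channel: Channel;
  name: string;
  run: (ctx: Record<string, string>) => void; // throws to signal failure
}

interface TimelineEntry {
  channel: Channel;
  name: string;
  status: Status;
}

// Run steps in order with one shared context, producing one combined timeline.
function runFlow(steps: Step[]): TimelineEntry[] {
  const ctx: Record<string, string> = {};
  const timeline: TimelineEntry[] = [];
  let failed = false;
  for (const step of steps) {
    let status: Status = "skipped";
    if (!failed) {
      try {
        step.run(ctx);
        status = "passed";
      } catch {
        status = "failed";
        failed = true;
      }
    }
    timeline.push({ channel: step.channel, name: step.name, status });
  }
  return timeline;
}

// Stubbed journey: web login, then API check, then mobile check, sharing an account id.
const journey: Step[] = [
  { channel: "web", name: "log in", run: (ctx) => { ctx.accountId = "A-123"; } },
  { channel: "api", name: "verify account record", run: (ctx) => { if (!ctx.accountId) throw new Error("no account"); } },
  { channel: "mobile", name: "see balance in app", run: () => {} },
];

console.log(runFlow(journey).map((e) => `${e.channel}:${e.status}`).join(" "));
// web:passed api:passed mobile:passed
```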

Furthermore, every test result is automatically fed into a centralized, out-of-the-box reporting dashboard complete with video playback, detailed logs, and performance metrics. This provides immediate, actionable insights for the whole team, a stark contrast to a DIY approach where engineers must integrate and manage separate third-party tools just to understand their test results.

The Decision Framework: Qyrus vs. Playwright-MCP

Choosing the right path requires a clear-eyed assessment of the practical trade-offs. Here is a direct comparison across six critical decision factors.

1. Time-to-Value & Setup Effort

This measures how quickly each approach delivers usable automation.

- Qyrus: The platform is designed for immediate impact, with teams able to start creating AI-generated tests on day one. This acceleration is significant; one bank that adopted Qyrus cut its typical UAT cycle from 8–10 weeks down to just 3 weeks, driven by the platform’s ability to automate around 90% of their manual test cases.

- Playwright + MCP: This approach requires a substantial upfront investment before delivering value. The initial setup—which includes standing up the framework, configuring an MCP server, and integrating with CI pipelines—is estimated to take 4–6 person-months of engineering effort.
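The 4–6 person-month setup estimate can be converted to dollars using the $150k/year fully-loaded engineer cost assumed in the cost comparison below. This is simple arithmetic under that assumption, not a quote.

```typescript
// Fully loaded engineer cost assumed in the cost comparison ($/year).
const ENGINEER_COST_PER_YEAR = 150_000;

// Dollar cost of an effort measured in person-months.
function personMonthsToDollars(personMonths: number): number {
  return (personMonths * ENGINEER_COST_PER_YEAR) / 12;
}

console.log(personMonthsToDollars(4)); // 50000
console.log(personMonthsToDollars(6)); // 75000
```

Under that assumption, the DIY setup alone represents roughly $50k–$75k of engineering time before the first AI-generated test runs.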

2. AI Implementation: Feature vs. Project

This compares how AI is integrated into the workflow.

- Qyrus: AI is treated as a turnkey feature and a “push-button productivity booster”. The AI behavior is pre-tuned, and the cost is amortized into the subscription fee.

- Playwright + MCP: Adopting AI is a DIY project. The team is responsible for hosting the MCP server, managing LLM API keys, crafting and maintaining prompts, and implementing guardrails to prevent errors. This distinction is best summarized by the observation: “Qyrus: AI is a feature. DIY: AI is a project”.
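The "guardrails" a DIY team must build can be illustrated with a hypothetical wrapper that an agent loop consults before forwarding any tool call to the MCP server. It enforces an allowlist of tool names and a per-run call budget. The tool names and limits are assumptions for the example. A real deployment would also need argument validation, logging, and human review for risky actions.

```typescript
// Hypothetical pre-flight check applied to every tool call the model requests.
class ToolCallGuard {
  private callsMade = 0;
  private readonly allowedTools: string[];
  private readonly maxCallsPerRun: number;

  constructor(allowedTools: string[], maxCallsPerRun: number) {
    this.allowedTools = allowedTools;
    this.maxCallsPerRun = maxCallsPerRun;
  }

  // Throws instead of letting a disallowed or over-budget call through.
  authorize(toolName: string): void {
    if (this.allowedTools.indexOf(toolName) === -1) {
      throw new Error(`Blocked tool call: ${toolName}`);
    }
    if (this.callsMade >= this.maxCallsPerRun) {
      throw new Error(`Call budget of ${this.maxCallsPerRun} exhausted`);
    }
    this.callsMade++;
  }
}

const guard = new ToolCallGuard(["browser_navigate", "browser_snapshot", "browser_click"], 50);
guard.authorize("browser_navigate"); // allowed
try {
  guard.authorize("delete_all_records"); // hypothetical dangerous tool
} catch (e) {
  console.log((e as Error).message); // Blocked tool call: delete_all_records
}
```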

3. Technical Coverage & Orchestration

This evaluates the ability to test across different application channels.

- Qyrus: The platform was built for unified, multi-channel testing, supporting Web, API, and Mobile in a single, orchestrated flow. This provides one consolidated report and timeline for a complete end-to-end user journey.

- Playwright + MCP: Playwright is primarily a web UI automation tool. Covering other channels requires finding and integrating additional libraries, such as Appium for mobile, and then “gluing these pieces together” in the test code. This often leads to fragmented test suites and separate reports that must be correlated manually.

4. Total Cost of Ownership (TCO)

This looks beyond the initial price tag to the full long-term cost.

- Qyrus: The cost is a predictable annual subscription. While it involves a license fee, a Forrester analysis calculated a 213% ROI and a payback period of less than six months, driven by savings in labor and quality improvements.

- Playwright + MCP: The “open source is free like a puppy, not free like a beer” analogy applies here. The TCO is often 1.5 to 2 times higher than the managed solution due to ongoing operational costs, which include an estimated 1-2 full-time engineers for maintenance, infrastructure costs, and variable LLM token consumption.
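The sketch below is a rough three-year labor estimate for the DIY path, showing how the ongoing personnel line dominates. It uses the figures given in this comparison: $150k/year fully loaded per engineer, 4–6 person-months of setup, and 1–2 FTEs of maintenance. Infrastructure and LLM token costs are deliberately left out, and no Qyrus license figure is assumed.

```typescript
const COST_PER_ENGINEER_YEAR = 150_000; // fully loaded, per the cost model's assumptions
const YEARS = 3;

// DIY labor only: one-time setup plus ongoing maintenance headcount over the period.
function diyLaborCost(setupPersonMonths: number, maintenanceFtes: number): number {
  const setup = (setupPersonMonths * COST_PER_ENGINEER_YEAR) / 12;
  const maintenance = maintenanceFtes * COST_PER_ENGINEER_YEAR * YEARS;
  return setup + maintenance;
}

console.log(diyLaborCost(4, 1)); // 500000 (low end)
console.log(diyLaborCost(6, 2)); // 975000 (high end)
```

Any subscription quote can be compared directly against this range of roughly $0.5M to $1M in labor over three years.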

Below is a cost comparison table for a hypothetical 3-year period, based on a mid-size team and application (assumptions detailed after):

| Cost Component | Qyrus (Platform) | DIY Playwright+MCP |
| --- | --- | --- |
| Initial Setup Effort | Minimal – Platform ready Day 1; onboarding and test migration in a few weeks (vendor support helps). | High – Stand up framework, MCP server, CI, etc. Estimated 4–6 person-months of engineering effort (project delay). |
| License/Subscription | Subscription fee (cloud + support). Predictable (e.g. $X per year). | No license cost for Playwright. However, no vendor support – you own all maintenance. |
| Infrastructure & Tools | Included in subscription: browser farm, devices, reporting dashboard, uptime SLA. | Infra costs: cloud VM/container hours for test runners; optional device cloud service for mobile ($ per minute or monthly). Tool add-ons: e.g., monitoring, results dashboard (if not built in). |
| LLM Usage (AI features) | Included (Qyrus’s AI cost is amortized into the fee). No extra charge per test generated. | Token costs: direct usage of the OpenAI/Anthropic API by the MCP-driven agent, e.g., $0.015 per 1K output tokens ($1 or less per 100 tests, assuming ~50K tokens total). Scales with test generation frequency. |
| Personnel (Maintenance) | Lower overhead: vendor handles platform updates, grid maintenance, security patches. QA engineers focus on writing tests and analyzing failures, not framework upkeep. | Higher overhead: requires additional SDET/DevOps capacity to maintain the framework, update dependencies, handle flaky tests, etc., e.g., +1–2 FTEs dedicated to test platform and triage. |
| Support & Training | 24×7 vendor support included; faster issue resolution. Built-in training materials for new users. | Community support only (forums, GitHub) – no SLAs. Internal expertise required for troubleshooting (risk if key engineer leaves). |
| Defect Risk & Quality Cost | Improved coverage and reliability reduce the risk of costly production bugs (missed defects can cost 100× more to fix in production). | Higher risk of gaps or flaky tests leading to escaped defects. Downtime or failures due to test infra issues are on you (potentially delaying releases). |
| Reporting & Analytics | Included: centralized dashboard with video, logs, and metrics out of the box. | Requires 3rd-party tools: must integrate, pay for, and maintain tools like ReportPortal or Allure. |

Assumptions: This model assumes a fully-loaded engineer cost of $150k/year (for calculating person-month cost), cloud infrastructure costs based on typical usage, and LLM costs using current pricing (Claude Sonnet 4 or GPT-4 at ~$0.012–0.015 per 1K output tokens). It also assumes roughly 100–200 test scenarios initially, scaling to 300+ over 3 years, with moderate use of AI generation for new tests and maintenance.
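The LLM line in the table is easy to sanity-check. It uses the stated price of $0.015 per 1K output tokens and ~50K output tokens per 100 generated tests. Those two figures imply about 500 tokens per test, so 100 tests cost about $0.75.

The estimate covers output tokens only. Providers bill input tokens (such as page snapshots sent to the model) separately, so treat this figure as a lower bound.

```typescript
// Price per 1,000 output tokens, in dollars (from the stated assumptions).
const PRICE_PER_1K_OUTPUT_TOKENS = 0.015;
// ~50K tokens per 100 tests implies roughly 500 output tokens per generated test.
const OUTPUT_TOKENS_PER_TEST = 500;

function generationCost(
  tests: number,
  tokensPerTest = OUTPUT_TOKENS_PER_TEST,
  pricePer1K = PRICE_PER_1K_OUTPUT_TOKENS,
): number {
  return ((tests * tokensPerTest) / 1000) * pricePer1K;
}

console.log(generationCost(100).toFixed(2)); // 0.75
console.log(generationCost(300, 500, 0.012).toFixed(2)); // 1.80
```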

5. Maintenance, Scalability & Flakiness

This assesses the long-term effort required to keep the system running reliably.

- Qyrus: As a cloud-based SaaS, the platform scales elastically, and the vendor is responsible for infrastructure, patching, and uptime via an SLA and 24×7 support. Features like self-healing locators reduce the maintenance burden from UI changes.

- Playwright + MCP: The internal team becomes the de facto operations team for the test infrastructure. They are responsible for scaling CI runners, fixing issues at 2 AM, and managing flaky tests. Flakiness is a major hidden cost; one financial model shows that for a mid-sized team, investigating spurious test failures can waste over $150,000 in engineering time annually.
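One common way to separate flaky tests from genuine failures is to retry failing tests and look at the pattern of attempts. Playwright's test runner, for example, reports a test that fails and then passes on retry as "flaky". The sketch below is a simplified, standalone version of that classification, plus the resulting flaky rate. It is illustrative, not Playwright's reporter code.

```typescript
type AttemptResult = "passed" | "failed";
type Outcome = "passed" | "flaky" | "failed";

// Classify one test from its attempts in order (the first run plus any retries).
function classify(attempts: AttemptResult[]): Outcome {
  if (attempts.length === 0) {
    throw new Error("No attempts recorded");
  }
  const last = attempts[attempts.length - 1];
  if (last === "failed") {
    return "failed"; // never recovered: treat as a real failure
  }
  // Passed in the end; if any earlier attempt failed, the result is flaky.
  return attempts.indexOf("failed") === -1 ? "passed" : "flaky";
}

// Share of tests in a run that only passed after a retry.
function flakyRate(results: AttemptResult[][]): number {
  if (results.length === 0) return 0;
  const flaky = results.filter((r) => classify(r) === "flaky").length;
  return flaky / results.length;
}

const run: AttemptResult[][] = [["passed"], ["failed", "passed"], ["failed", "failed"], ["passed"]];
console.log(run.map(classify).join(", ")); // passed, flaky, failed, passed
console.log(flakyRate(run)); // 0.25
```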

Below is a sensitivity table illustrating annual cost of maintenance under different assumptions. The maintenance cost is modeled as hours of engineering time wasted on flaky failures plus time spent writing/refactoring tests.

| Scenario | Authoring Speed (vs. baseline coding) | Flaky Test % | Estimated Extra Effort (hrs/year) | Impact on TCO |
| --- | --- | --- | --- | --- |
| Status Quo (Baseline) | 1× (no AI, code manually) | 10% (high) | 400 hours (0.2 FTE) debugging flakes | (Too slow – not viable baseline) |
| Qyrus Platform | ~3× faster creation (assumed) | ~2% (very low) | 50 hours (vendor mitigates most) | Lowest labor cost – focus on tests, not fixes |
| DIY w/ AI Assist (Conservative) | ~2× faster creation | 5% (med) | 150 hours (self-managed) | Higher cost – needs an engineer part-time |
| DIY w/ AI Assist (Optimistic) | ~3× faster creation | 5% (med) | 120 hours | Still higher than Qyrus due to infra overhead |
| DIY w/o sufficient guardrails | ~2× faster creation | 10% (high) | 300+ hours (thrash on failures) | Highest cost – likely delays, unhappy team |

The table assumes roughly 1,000 test runs per year for a mid-size suite, for illustration only.
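The "extra effort" column can be read as flaky failures per year multiplied by the time spent investigating each one. The sketch below uses the table's ~1,000 runs per year. It also assumes 4 hours of investigation per flaky failure and 2,000 working hours per FTE-year. With those assumptions it reproduces the baseline row (10% flaky, 400 hours, 0.2 FTE). The other rows are the article's own estimates and are not derived from this formula. The $150k/year engineer cost comes from the earlier cost model.

```typescript
interface FlakyEffort {
  hours: number;
  fte: number;
  dollars: number;
}

const RUNS_PER_YEAR = 1000; // from the table's assumption
const HOURS_PER_FLAKY_FAILURE = 4; // assumption for this sketch
const HOURS_PER_FTE_YEAR = 2000; // assumption: ~50 weeks x 40 hours
const HOURLY_COST = 150_000 / HOURS_PER_FTE_YEAR; // $75/hour at $150k/year fully loaded

// Each run has a `flakyRate` chance of a spurious failure that someone must investigate.
function flakyEffort(flakyRate: number): FlakyEffort {
  const hours = RUNS_PER_YEAR * flakyRate * HOURS_PER_FLAKY_FAILURE;
  return { hours, fte: hours / HOURS_PER_FTE_YEAR, dollars: hours * HOURLY_COST };
}

const baselineEffort = flakyEffort(0.1);
console.log(`${baselineEffort.hours} h, ${baselineEffort.fte} FTE, $${baselineEffort.dollars}`);
// 400 h, 0.2 FTE, $30000
```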

6. Team Skills & Collaboration

This considers who on the team can effectively contribute to the automation effort.

- Qyrus: The no-code interface ‘broadens the pool of contributors,’ allowing manual testers, business analysts, and developers to design and run tests. This directly addresses the industry-wide skills gap, where a staggering 42% of testing professionals report not being comfortable writing automation scripts.

- Playwright + MCP: The work remains centered on engineers with expertise in JavaScript or TypeScript. Even with AI assistance, debugging and maintenance require deep coding knowledge, which can create a bottleneck where only a few experts can manage the test suite.

The Security Equation: Managed Assurance vs. Agentic Risk

Utilizing AI agents in software testing introduces a new category of security and compliance risks. How each approach mitigates these risks is a critical factor, especially for organizations in regulated industries.

The DIY Agent Security Gauntlet

When you build your own AI-driven test system with a toolset like Playwright-MCP, you assume full responsibility for a wide gamut of new and complex security challenges. This is not a trivial concern; cybercrime losses, often stemming from exploited software vulnerabilities, have skyrocketed by 64% in a single year. The DIY approach expands your threat surface, requiring your team to become experts in securing not just your application, but an entire AI automation system. Key risks that must be proactively managed include:

- Data Privacy & IP Leakage: Any data sent to an external LLM API—including screen text or form values—could contain sensitive information. Without careful prompt sanitization, there’s a risk of inadvertently leaking customer PII or intellectual property.

- Prompt Injection Attacks: An attacker could place malicious text on your website that, when read by the testing agent, tricks it into revealing secure information or performing unintended actions.

- Hallucinations and False Actions: LLMs can sometimes generate incorrect or even dangerous steps. Without strict, custom-built guardrails, a Claude MCP agent might execute a sequence that deletes data or corrupts an environment if it misinterprets a command.

- API Misuse and Cost Overflow: A bug in the agent’s logic could cause an infinite loop of API calls to the LLM provider, racking up huge and unexpected charges. This requires implementing robust monitoring, rate limits, and budget alerts.

- Supply Chain Vulnerabilities: The system relies on a chain of open-source components, each of which could have vulnerabilities. A supply chain attack via a malicious library version could potentially grant an attacker access to your test environment.
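Of these risks, data leakage is the one most directly addressed in code: scrub text before it is sent to an external model. The sketch below is a minimal regex-based redaction pass. The patterns (email addresses, 13–16 digit card-like numbers, and US SSN-formatted numbers) are illustrative assumptions and far from exhaustive. Treat it as a starting point, not a compliance control.

```typescript
// Illustrative patterns only; real PII detection needs far broader coverage.
const REDACTIONS: Array<[RegExp, string]> = [
  [/[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/g, "[EMAIL]"],
  [/\b\d(?:[ -]?\d){12,15}\b/g, "[CARD]"], // 13-16 digits, optional spaces or dashes
  [/\b\d{3}-\d{2}-\d{4}\b/g, "[SSN]"], // US SSN format
];

// Replace sensitive-looking substrings before text leaves your network (e.g. in a prompt).
function redact(text: string): string {
  let result = text;
  for (const [pattern, label] of REDACTIONS) {
    result = result.replace(pattern, label);
  }
  return result;
}

const pageText = "Contact jane.doe@example.com, card 4111 1111 1111 1111, SSN 123-45-6789.";
console.log(redact(pageText));
// Contact [EMAIL], card [CARD], SSN [SSN].
```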

The Managed Platform Security Advantage

A managed solution like Qyrus is designed to handle these concerns with enterprise-grade security, abstracting the risk away from your team. This approach is built on a principle of risk transference.

- Built-in Security & Compliance: Qyrus is developed with industry best practices, including data encryption, role-based access control, and comprehensive audit logging. The vendor manages compliance certifications (like ISO or SOC 2) and ensures that all AI features operate within safe, sandboxed boundaries.

- Risk Transference: By using a proven platform, you transfer certain operational and security risks to the vendor. The vendor’s core business is to handle these threats continuously, likely with more dedicated resources than an internal team could provide.

- Guaranteed Uptime and Support: Uptime, disaster recovery, and 24×7 support are built into the Service Level Agreement (SLA). This provides an assurance of reliability that a DIY system, which relies on your internal team for fixes, cannot offer. The financial value of this guarantee is immense, as 91% of enterprises report that a single hour of downtime costs them over $300,000. Qyrus transfers uptime and patching risk out of your team; DIY puts it squarely back.

Conclusion: Making the Right Choice for Your Team

After a careful, head-to-head analysis, the evidence shows two valid but distinctly different paths for achieving AI-powered test automation. The decision is not simply about technology; it is about strategic alignment. The right choice depends entirely on your team’s resources, priorities, and what you believe will provide the greatest competitive advantage for your business.

To make the decision, consider which of these profiles best describes your organization:

- Choose the “Build” path with Playwright-MCP if: Your organization has strong in-house engineering talent, particularly SDETs and DevOps specialists who are prepared to invest in building and maintaining a custom testing platform. This path is ideal for teams that require deep, bespoke customization, want to integrate with a specific developer ecosystem like Azure and GitHub, and value the ultimate control that comes from owning their entire toolchain.

- Choose the “Buy” path with Qyrus if: Your primary goals are speed, predictable cost, and broad test coverage out of the box. This approach is the clear winner for teams that want to accelerate release cycles immediately, empower non-technical users to contribute to automation, and transfer operational and security risks to a vendor. If your goal is to focus engineering talent on your core product rather than internal tools, the financial case is definitive: a commissioned Forrester TEI study found that an organization using Qyrus achieved a 213% ROI, a $1 million net present value, and a payback period of less than six months.

Ultimately, maintaining a custom test framework is likely not what differentiates your business. If you remain on the fence, the most effective next step is a small-scale pilot with Qyrus, run as a bake-off: over a limited scope, automate the same critical test scenario in both systems and compare the results.